The business processes for the production of official statistics are described in the Generic Statistical Business Process Model (GSBPM, UNECE 2025).

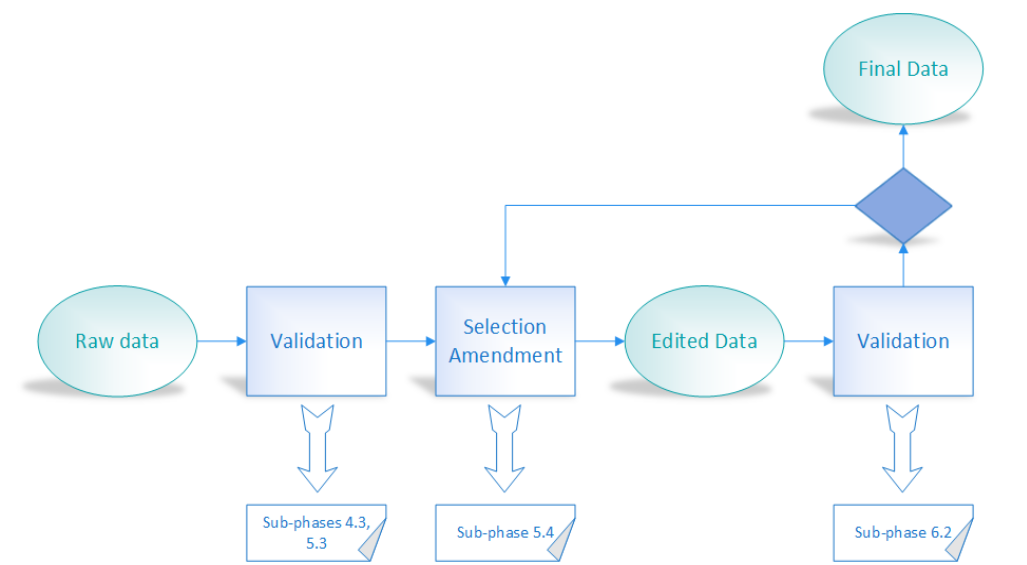

The schema illustrated in the GSBPM shows that data validation is performed in several phases of a production process. These phases are as follows:

GSBPM: sub-phase 2.5

The first phase in which data validation is introduced is the ‘design’ phase, more specifically sub-phase 2.5, ‘Design processing and analysis’. The description in GSBPM is as follows:

‘This sub-process designs the statistical processing methodology to be applied during the ‘Process’ and ‘Analyse’ phases. This can include specification of routines for coding, editing, imputing, estimating, integrating, validating and finalizing data sets.’

This concerns the design of a validation procedure or, more precisely, of a set of validation procedures that together make up a validation plan.

GSBPM: sub-phase 4.3

The first sub-phase of the GSBPM in which validation checks are performed is 4.3 ‘Run collection’ (part of the ‘Collect’ phase). As described in the GSBPM document, the checks at this stage deal with the formal aspects of the data and not with its content:

‘Some basic validation of the structure and integrity of the information received may take place within this sub-process, e.g. checking that files are in the right format and contain the expected fields. All validation of the content takes place in the Process phase.’
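A structural check of this kind can be sketched as follows. This is only an illustration, assuming that the data arrive as CSV text; the field names in `EXPECTED_FIELDS` and the function `check_structure` are hypothetical and are not taken from the GSBPM.

```python
import csv
import io

# Hypothetical field list; a real collection would take it from its own metadata.
EXPECTED_FIELDS = ["unit_id", "period", "turnover"]


def check_structure(text, expected_fields=EXPECTED_FIELDS):
    """Return a list of structural problems found in CSV text (empty if none)."""
    reader = csv.reader(io.StringIO(text))
    header = next(reader, None)
    if header is None:
        return ["file is empty"]

    problems = []
    # Does the file contain the expected fields?
    missing = [f for f in expected_fields if f not in header]
    if missing:
        problems.append(f"missing fields: {missing}")

    # Does every record have as many values as the header? (The header is record 1.)
    for record_no, row in enumerate(reader, start=2):
        if len(row) != len(header):
            problems.append(
                f"record {record_no}: expected {len(header)} values, found {len(row)}"
            )
    return problems


good = "unit_id,period,turnover\nA1,2024Q1,100\n"
bad = "unit_id,period\nA1,2024Q1,100\n"
print(check_structure(good))
print(check_structure(bad))
```

The check looks only at the form of the file. Whether a reported turnover is plausible is a question of content, which the GSBPM assigns to the ‘Process’ phase.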

GSBPM: sub-phase 5.3

In the ‘Process’ phase, sub-phase 5.3 refers to validation explicitly; it is in fact called ‘Review and validate’. The description given in the GSBPM document is as follows:

‘This sub-process examines data to try to identify potential problems, errors and discrepancies such as outliers, item non-response and miscoding. It can also be referred to as input data validation. It may be run iteratively, validating data against predefined edit rules, usually in a set order. It may flag data for automatic or manual inspection or editing. Reviewing and validating can apply to data from any type of source, before and after integration. Whilst validation is treated as part of the ‘Process’ phase, in practice, some elements of validation may occur alongside collection activities, particularly for modes such as web collection. Whilst this sub-process is concerned with detection of actual or potential errors, any correction activities that actually change the data are done in sub-process 5.4 (edit & impute)’.

Several observations:

- The term ‘input data validation’ suggests an order in the production process. Both the term and the idea can be used in the manual.

- Validation may occur alongside collection activities.

- Validation is distinguished from editing by the action of ‘correction’: correction is performed in the editing sub-phase, whereas validation only indicates whether there is (potentially) an error. The relationship between validation and data editing will be discussed later on.

- Even when an error is corrected in the editing sub-phase, it may reveal a need to improve the design, build or collection phases of the GSBPM.
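The separation between detecting and correcting errors can be illustrated with a minimal sketch. It assumes that records are plain dictionaries and that edit rules are applied in a fixed order; the rule names, the variables `turnover` and `employees`, and the function `validate` are hypothetical.

```python
# Hypothetical edit rules, applied in a set order: (name, condition that must hold).
RULES = [
    ("turnover_present", lambda r: r.get("turnover") is not None),
    ("turnover_non_negative",
     lambda r: r.get("turnover") is None or r["turnover"] >= 0),
    ("employees_non_negative",
     lambda r: r.get("employees") is None or r["employees"] >= 0),
]


def validate(records, rules=RULES):
    """Return (record index, rule name) for each failed rule.

    The records are only read, never changed: any correction would belong
    to a separate editing step.
    """
    flags = []
    for i, record in enumerate(records):
        for name, holds in rules:
            if not holds(record):
                flags.append((i, name))
    return flags


records = [
    {"turnover": 120, "employees": 4},
    {"turnover": None, "employees": 2},  # item non-response
    {"turnover": -5, "employees": 1},    # value violating an edit rule
]
flags = validate(records)
print(flags)
```

The output is a list of flags for automatic or manual inspection. The data themselves are left as received, which is what distinguishes this step from editing and imputation.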

GSBPM: sub-phase 6.2

The last sub-phase in which validation is performed is 6.2 (‘Validate outputs’). The description given in the GSBPM document is as follows:

‘This sub-process is where statisticians validate the quality of the outputs produced, in accordance with a general quality framework and with expectations. This sub-process also includes activities involved with the gathering of intelligence, with the cumulative effect of building up a body of knowledge about a specific statistical domain. This knowledge is then applied to the current collection, in the current environment, to identify any divergence from expectations and to allow informed analyses. Validation activities can include:

- [checking that the population coverage and response rates are as required]

- comparing the statistics with previous cycles (if applicable)

- [checking that the associated metadata and paradata (process metadata) are present and in line with expectations]

- confronting the statistics against other relevant data (both internal and external)

- investigating inconsistencies in the statistics

- performing macro editing

- validating the statistics against expectations and domain intelligence’

Checks that are not usually considered part of a ‘data validation’ procedure are shown in brackets. These are the first and the third items, where the emphasis is not on the data.
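One of the output checks listed above, the comparison of statistics with previous cycles, can be sketched as follows. The 20 % threshold, the series names and the function `flag_large_changes` are assumptions made for illustration only; an appropriate threshold depends on the statistical domain.

```python
def flag_large_changes(current, previous, max_rel_change=0.2):
    """Return the series whose relative change from the previous cycle
    exceeds the threshold, together with that change."""
    flagged = {}
    for key, value in current.items():
        prev = previous.get(key)
        if prev is None or prev == 0:
            continue  # no comparable value in the previous cycle
        change = (value - prev) / abs(prev)
        if abs(change) > max_rel_change:
            flagged[key] = change
    return flagged


# Hypothetical aggregates for two consecutive cycles.
previous = {"retail": 100.0, "construction": 200.0, "transport": 50.0}
current = {"retail": 104.0, "construction": 150.0, "transport": 50.0}

flagged = flag_large_changes(current, previous)
print(flagged)
```

A flagged series is not necessarily wrong. It marks a divergence from expectations that calls for the informed analysis described in the GSBPM text.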